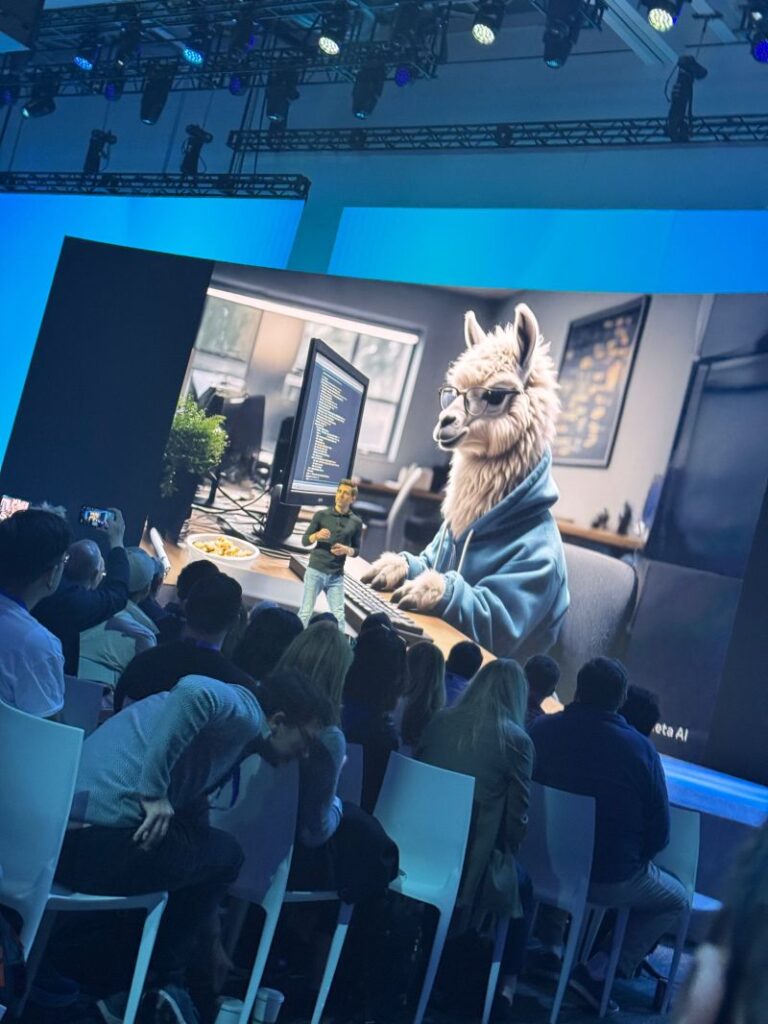

Big announcements out of LlamaCon 2025 today — a significant leap forward for the open-source AI community.

Meta unveiled the new Llama API, now supporting local fine-tuning, multimodal input, and mixture-of-experts architectures. This means we can build smaller, faster, and more efficient models, trained for specific real-world contexts — without the heavy infrastructure of centralized AI systems.

Alongside the Meta AI app, which enables new multimodal interactions, these updates point toward a more distributed future for artificial intelligence: one where AI becomes a local companion rather than a distant service.

Why This Matters for Learning

At ASU Next Lab, we see this as a foundational moment. The next wave of educational innovation won’t come from ever-larger models, but from what we might call “little llamas” — compact, fine-tuned AI models designed for purpose, place, and people.

These new capabilities align directly with our EDge AI initiative:

- Running AI locally on low-cost, low-power devices

- Supporting multimodal learning in low-connectivity settings

- Enabling adaptive content for students and teachers without the cloud

This is the infrastructure for equitable, human-centered AI — where access isn’t determined by bandwidth or geography.
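To make the "low-cost, low-power devices" point concrete, here is a minimal back-of-the-envelope sketch of how much memory a model's weights need at different precisions. The parameter counts, bit widths, 4 GB device budget, and 20% runtime overhead are illustrative assumptions, not figures from Meta or Next Lab, and the function names are hypothetical.

```python
def weight_memory_gb(n_params: float, bits_per_weight: int) -> float:
    """Approximate memory needed to hold the model weights, in GB (10^9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


def fits_on_device(
    n_params: float,
    bits_per_weight: int,
    device_ram_gb: float,
    overhead_fraction: float = 0.2,  # assumed headroom for activations, cache, runtime
) -> bool:
    """Rough check: do the weights plus an assumed overhead fit in device RAM?"""
    needed_gb = weight_memory_gb(n_params, bits_per_weight) * (1 + overhead_fraction)
    return needed_gb <= device_ram_gb


if __name__ == "__main__":
    device_ram_gb = 4.0  # hypothetical low-cost device
    for n_params in (1e9, 8e9):
        for bits in (16, 4):
            size = weight_memory_gb(n_params, bits)
            ok = fits_on_device(n_params, bits, device_ram_gb)
            print(f"{n_params / 1e9:.0f}B params @ {bits}-bit: {size:.1f} GB, fits: {ok}")
```

Under these assumptions, a smaller model stored at lower precision fits comfortably on modest hardware, while a larger model at full 16-bit precision does not. That gap is the practical case for "little llamas."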

The Road Ahead

Frontier models will continue to push the boundaries of scale and capability. Still, the more fundamental transformation lies in personalized, contextual, and community-driven AI systems that empower local creativity, education, and problem-solving.

At Next Lab, we’re already experimenting with this future, using Meta’s Llama models to bring intelligent, offline learning tools to life.

The future of AI isn’t just faster or smarter — it’s more distributed, more adaptable, and more human.

Learn more:

📄 LlamaCon news: https://ai.meta.com/blog/llamacon-llama-news/

🌍 Explore EDge AI: https://nextlab.asu.edu/edge-ai/